Student Projects

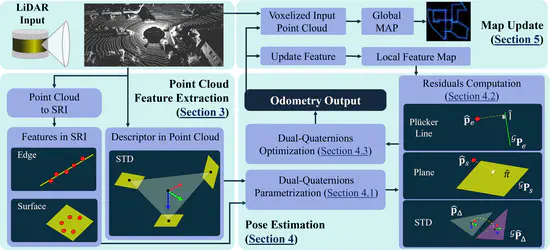

Learning agile flights in cluttered environments for quadrotors from pixel information using differentiable simulators and novel sensors

This project focuses on enabling high-speed, agile autonomous aerial robot navigation in visually degraded and environmentally challenging conditions. These include post-earthquake zones, underground exploration, anti-poaching missions, and night-time search and rescue operations, where conventional sensors such as LiDARs and cameras fail. To address these challenges, the project introduces a novel visuo-sonic sensing suite that combines low-power event cameras with ultrasound sensors, which perform well in darkness, fog, and smoke, and around transparent surfaces. Using differentiable physics-based learning, the system adapts its control policies in real time to these unconventional sensor inputs, with the goal of reliable operation in harsh and dynamically changing environments.

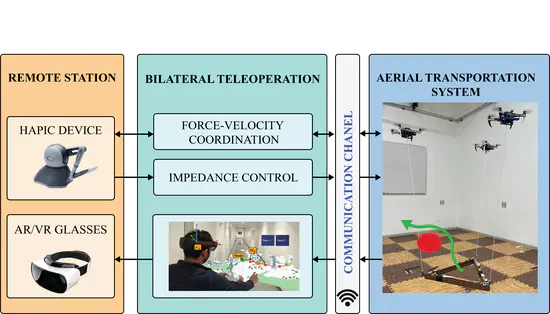

Enhancing Disturbance Rejection in Multi-Aerial Robot Systems with Cable-Suspended Loads

A cable force allocation matrix has proven beneficial for human-payload interaction and for maintaining safe separation between robots without compromising payload trajectory tracking. However, the differential cable force allocation matrix remains little studied, even though it could further improve dynamic manipulability and disturbance rejection.

Geometric Impedance Manipulation in Multi-Aerial Robot Systems with Cable-Suspended Loads

This research explores advanced control strategies for human-robot interactions, focusing on a geometric impedance controller on the SE(3) manifold to address the coupling of translational and rotational dynamics in complex motions.
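
As a rough illustration of what an impedance law on SE(3) can look like, the sketch below computes a spring-damper wrench from a pose error. It is not the project's controller: the error functions (a body-frame position error and the common rotation error 0.5 * vee(R_d^T R - R^T R_d)), the scalar gains, the static desired pose, and all function names are assumptions chosen for illustration. Expressing the position error in the body frame is one simple way the rotation enters the translational term.

```python
import math


def transpose(a):
    """Transpose of a 3x3 matrix given as nested lists."""
    return [[a[j][i] for j in range(3)] for i in range(3)]


def mat_mul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(a, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def vee(s):
    """Map a skew-symmetric 3x3 matrix to its 3-vector."""
    return [s[2][1], s[0][2], s[1][0]]


def rotation_error(r, r_des):
    """Assumed rotation error: 0.5 * vee(R_d^T R - R^T R_d)."""
    a = mat_mul(transpose(r_des), r)
    a_t = transpose(a)
    skew = [[a[i][j] - a_t[i][j] for j in range(3)] for i in range(3)]
    return [0.5 * x for x in vee(skew)]


def impedance_wrench(p, r, v_body, w_body, p_des, r_des,
                     k_p=4.0, k_r=2.0, d_v=1.0, d_w=0.5):
    """Spring-damper wrench in the body frame (hypothetical scalar gains).

    The desired pose is assumed static, so damping acts directly on the
    body-frame linear velocity v_body and angular velocity w_body.
    """
    # Position error rotated into the body frame: R^T (p - p_des).
    e_p = mat_vec(transpose(r), [p[i] - p_des[i] for i in range(3)])
    e_r = rotation_error(r, r_des)
    force = [-k_p * e_p[i] - d_v * v_body[i] for i in range(3)]
    torque = [-k_r * e_r[i] - d_w * w_body[i] for i in range(3)]
    return force, torque


def rot_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
```

For a body at rest, displaced 1 m along the world x axis and yawed by 90 degrees relative to an identity desired pose, `impedance_wrench([1.0, 0.0, 0.0], rot_z(math.pi / 2), [0.0] * 3, [0.0] * 3, [0.0] * 3, rot_z(0.0))` yields a restoring force along the body y axis and a restoring torque about z, since the same world-frame offset is seen differently once the body has rotated.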